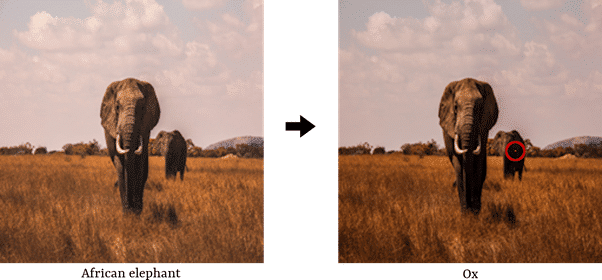

Like many other artificial intelligence solutions, deepfake AI has improved a lot over the last few years. From a single image of a target, it is already possible to generate a whole video (Siarohin et al., 2019). The quality of the models has also reached a level where human detection is about as good as simply guessing (Rossler et al., 2019). While this is a great achievement from a technological perspective, many people are also worried about potential abuse. And not without reason: criminals have already used AI-based software to mimic an executive's voice and trick a company into transferring more than 200,000 euros to them (Stupp, 2019). Luckily, AI could also be part of the solution, as various deep learning models have been developed to detect videos that have been altered using deepfake technology (Rossler et al., 2019). This is great news, but it does not solve the issue overnight: adversarial attacks can exploit the pattern recognition of the detection AI so that manipulated videos slip through (Hussain et al., 2021). Such an attack can work by adding carefully crafted, often imperceptible noise to each frame, or even by changing just a few pixels, to fool a deep neural network.
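To make "adding some noise" concrete, here is a minimal sketch of the fast gradient sign method (FGSM), one standard way to build such a perturbation. It is not the attack code from Hussain et al. (2021): the detector is a hypothetical toy logistic-regression model over four pixel values, and the weights, bias, frame, and `epsilon` are made-up numbers chosen for illustration.

```python
import math

# Hypothetical toy detector (assumption, not a real model): it returns the
# probability that a 4-pixel "frame" with values in [0, 1] is fake.
WEIGHTS = [2.0, -1.5, 3.0, 0.5]
BIAS = -1.0


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fake_score(pixels: list[float]) -> float:
    z = sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS
    return sigmoid(z)


def fgsm(pixels: list[float], epsilon: float) -> list[float]:
    """One FGSM step that pushes the fake score down.

    For this model the derivative of the logit with respect to pixel i is
    WEIGHTS[i], so moving each pixel by -epsilon * sign(weight) lowers the
    logit as much as possible while no pixel changes by more than epsilon.
    Results are clipped to the valid pixel range [0, 1].
    """
    adv = []
    for w, p in zip(WEIGHTS, pixels):
        step = -epsilon * math.copysign(1.0, w) if w != 0 else 0.0
        adv.append(min(1.0, max(0.0, p + step)))
    return adv


frame = [0.6, 0.2, 0.5, 0.4]
print(round(fake_score(frame), 3))  # 0.832 -> flagged as fake
adversarial = fgsm(frame, epsilon=0.3)
print(round(fake_score(adversarial), 3))  # 0.378 -> now passes as real
```

Each pixel moves by at most `epsilon`, yet the logit drops by `epsilon` times the sum of the absolute weights. In a real frame with millions of pixels, a much smaller per-pixel change can add up in the same way, which is one common explanation for why the noise can stay invisible to humans while still flipping the detector's decision.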

If the detection AI is open source, creating such an attack is quite easy: the attacker takes the deepfake video and alters pixels, guided by the detector's own gradients, until the algorithm is no longer able to detect the deepfake. However, even when the detection software is not in the public domain, an attack is still possible (although more complicated), because the attacker can query the detector and learn from its outputs (Hussain et al., 2021). Because such black-box attacks are also possible, a cat-and-mouse game arises in which the detection AI tries to spot the attacks and the attackers evolve to dodge these detections. For now the future of detection AI is uncertain, but my guess is that the detector will eventually win. Large tech companies are heavily incentivized to solve this issue, especially with increasing pressure from legislators. Nefarious actors, on the other hand, have much less incentive to dodge detection: simpler and cheaper options like social engineering or outright lies tend to do the job about as well.
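Without access to the model's internals, the attacker can still estimate the gradient from the detector's output scores alone. The sketch below shows one common query-only approach: estimate the gradient with natural evolution strategies (NES, with antithetic sampling), then take small sign steps that stay within an `epsilon` budget around the original frame. The detector, its hidden weights, and all step sizes and budgets are hypothetical, and the function names are illustrative, not taken from any library or from Hussain et al. (2021).

```python
import math
import random

# Hypothetical black-box detector (assumption): the attacker may call it,
# but never reads _HIDDEN_W or _HIDDEN_B directly.
_HIDDEN_W = [2.0, -1.5, 3.0, 0.5]
_HIDDEN_B = -1.0


def query_detector(pixels: list[float]) -> float:
    z = sum(w * p for w, p in zip(_HIDDEN_W, pixels)) + _HIDDEN_B
    return 1.0 / (1.0 + math.exp(-z))


def nes_gradient(f, x, sigma=0.01, samples=50, rng=random):
    """Estimate the gradient of f at x using only queries to f.

    For Gaussian directions u, (f(x + sigma*u) - f(x - sigma*u)) * u
    averages to roughly 2 * sigma * grad f(x).
    """
    grad = [0.0] * len(x)
    for _ in range(samples):
        u = [rng.gauss(0.0, 1.0) for _ in x]
        plus = f([xi + sigma * ui for xi, ui in zip(x, u)])
        minus = f([xi - sigma * ui for xi, ui in zip(x, u)])
        for i, ui in enumerate(u):
            grad[i] += (plus - minus) * ui
    return [g / (2 * sigma * samples) for g in grad]


def black_box_attack(f, x0, epsilon=0.3, step=0.05, max_iters=50, seed=0):
    """Sign steps on the NES estimate, kept within epsilon of x0 and in [0, 1]."""
    rng = random.Random(seed)
    x = list(x0)
    for _ in range(max_iters):
        if f(x) < 0.5:  # the detector now calls the frame "real"
            break
        g = nes_gradient(f, x, rng=rng)
        # Step against the estimated gradient to lower the fake score.
        x = [xi - step * math.copysign(1.0, gi) if gi != 0 else xi
             for xi, gi in zip(x, g)]
        # Project back into the epsilon-ball around x0, then the pixel range.
        x = [min(1.0, max(0.0, min(o + epsilon, max(o - epsilon, xi))))
             for o, xi in zip(x0, x)]
    return x


frame = [0.6, 0.2, 0.5, 0.4]
adv = black_box_attack(query_detector, frame)
# The first score is about 0.832; the second should fall below 0.5.
print(round(query_detector(frame), 3), round(query_detector(adv), 3))
```

Each call to `nes_gradient` costs `2 * samples` detector queries, so the attacker pays in queries for what a white-box attacker gets from a single gradient computation, which is one reason black-box attacks are more expensive to mount.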

Bibliography

Hussain, S., Neekhara, P., Jere, M., Koushanfar, F., & McAuley, J. (2021). Adversarial deepfakes: Evaluating vulnerability of deepfake detectors to adversarial examples. In Proceedings of the IEEE/CVF winter conference on applications of computer vision (pp. 3348-3357).

Rossler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., & Nießner, M. (2019). FaceForensics++: Learning to detect manipulated facial images. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 1-11).

Siarohin, A., Lathuilière, S., Tulyakov, S., Ricci, E., & Sebe, N. (2019). First order motion model for image animation. Advances in Neural Information Processing Systems, 32.

Stupp, C. (2019). Fraudsters used AI to mimic CEO’s voice in unusual cybercrime case. The Wall Street Journal. <http://www.wsj.com/articles/fraudsters-use-ai-to-mimic-ceos-voice-in-unusual-cybercrime-case-11567157402>

I think that deepfakes are one of the big problems that AI has created. They pose significant risks, especially for critical sectors and high-level political environments. This becomes clear when looking at one of the more recent cases, where a person using deepfake material of the president of Ukraine entered high-level political discussions. Yes, people noticed rather quickly that something was not right, but then again, the quality of that deepfake was far from the best I have seen in other demonstrations. As with other technological advances, risks always follow. In the future, I think this will require all platforms and software applications that handle images or video to be equipped with advanced detection tools, so that everyone can spot these fakes directly. I expect a dynamic similar to that of ordinary computer viruses: a constant game of catch-up with the other side. Let's hope that the detection side wins when it comes to preventing harm from deepfakes.